🙂 About Me

Hello! I’m Qihao (起豪), a master’s student at the School of Computer Science at South China Normal University, advised by Professor Tianyong Hao and supported by the TAM Lab. Here is my CV.

My research interests include multimodal representation learning, natural language processing, multilingual lexical semantics, and large language and vision-language models. See my Publications (mostly up to date) or my Google Scholar page for papers and more information.

I am a travel enthusiast. In the past three years, I have been to Shanghai, Hangzhou, Changsha, Xiangxi Tujia and Miao Autonomous Prefecture, Wuhan, Chaohu, Huangshan, Chengdu, Dujiangyan, Aba Tibetan Autonomous Prefecture, Tianjin, Beijing, Macau, Hong Kong, Seoul, Guilin, Bangkok, Qingyuan, Kunming, Dali, and Lijiang. Traveling around the world is one of my dreams.

I am also one of the founders of the Studio of Empirical Methods in Linguistics (语言学实证思辨坊, LingX), which provides consulting services and technical support in quantitative linguistics, computational linguistics, and natural language processing. The studio has designed and launched a series of courses titled Text Mining and Python Programming and has organized online academic training sessions attended by over 400 teachers and students from various universities. I am the principal instructor for the courses.

Due to some unexpected changes, I missed the 2024-2025 PhD application season. I am currently a research assistant at SCNU, advised by Professor Hao, and am seeking a PhD position for Spring/Fall 2026. I hope to delve further into the research I’m passionate about and make greater contributions to the field of artificial intelligence.

🔥 News

- 2025.08.21: 🎉🎉 A co-authored paper has been accepted to the EMNLP 2025 main conference (CCF B)! Congrats to Jiale and Xuelian!

- 2025.04.29: 🎉🎉 A first-author paper has been accepted to IJCAI 2025 (CCF A)! See you in Guangzhou!

- 2024.05.30: 🎉🎉 I will be joining The Education University of Hong Kong as a research assistant for six months starting in summer 2024, co-advised by Professor Guandong Xu. I would like to thank Professor Tianyong Hao for giving me the opportunity to go abroad on exchange!

- 2024.05.27: 🎉🎉 I have been granted a project supported by the Scientific Research Innovation Project of the Graduate School of South China Normal University. It earned me an honor!

- 2024.05.15: 🎉🎉 A first-author paper has been accepted to the ACL 2024 main conference (CCF A)! See you in Bangkok!

- 2024.04.15: 🎉🎉 I have arrived in Seoul, South Korea, to attend the ICASSP 2024 conference. I look forward to meeting you there!

- 2024.04.01: 🎉🎉 I passed the interview with the TsinghuaNLP Lab and established a research collaboration with the excellent researchers at Tsinghua University!

🥇 Honors and Awards

Competition

- First Prize, International Natural Language Processing Competition SemEval-2023 Task 1 - Visual Word Sense Disambiguation (First Author)

- First Prize, FigLang-2024 Shared Task - Multimodal Figurative Language (Second Author)

📝 Publications (Updating)

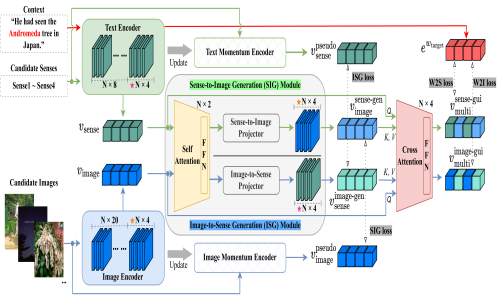

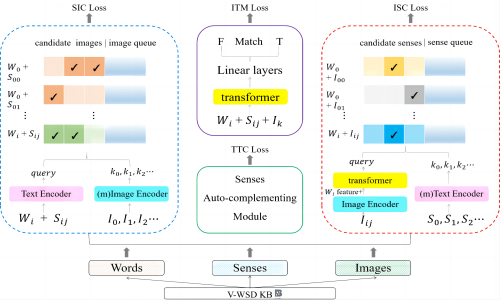

Qihao Yang, Yong Li, Xuelin Wang, Fu Lee Wang (Hong Kong), Tianyong Hao*(corresponding author)

Key words

- Word Sense Disambiguation, Contrastive Learning, Multimodal Learning.

Project

- Supported by the Scientific Research Innovation Project of Graduate School of South China Normal University (Grant No. 2024KYLX090).

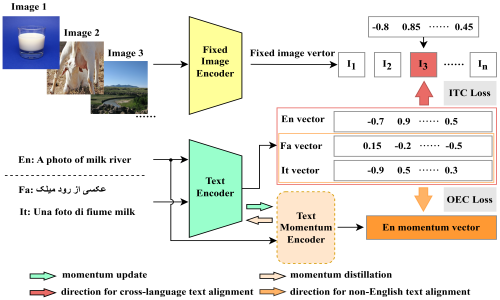

Qihao Yang, Xuelin Wang, Yong Li, Lap-Kei Lee (Hong Kong), Fu Lee Wang (Hong Kong), Tianyong Hao*(corresponding author)

Key words

- Visual Word Sense Disambiguation, Image-Text Retrieval, Multimodal Learning, Cross-lingual.

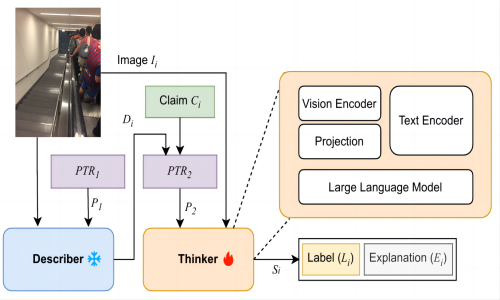

Qihao Yang, Xuelin Wang*(corresponding author)

Key words

- Multimodal Figurative Language, Vision-Language Model, Multimodal Representation Learning.

A Textual Modal Supplement Framework for Understanding Multi-Modal Figurative Language

Jiale Chen, Qihao Yang, Xuelian Dong, Xiaoling Mao, Tianyong Hao*(corresponding author)

Key words

- Figurative Language, Vision-Language Model, Multimodal Fine-tuning.

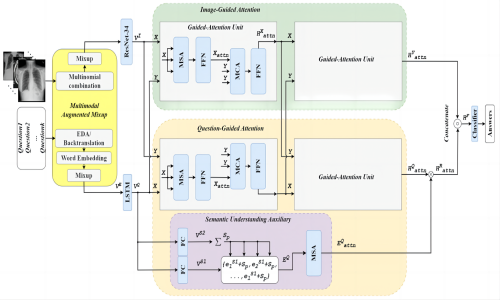

Yong Li, Qihao Yang, Fu Lee Wang (Hong Kong), Lap-Kei Lee (Hong Kong), Yingying Qu*(corresponding author), Tianyong Hao*(corresponding author)

Key words

- Medical Visual Question Answering, Cross Modal Attention, Data Augmentation, Mixup, Multimodal Interaction.

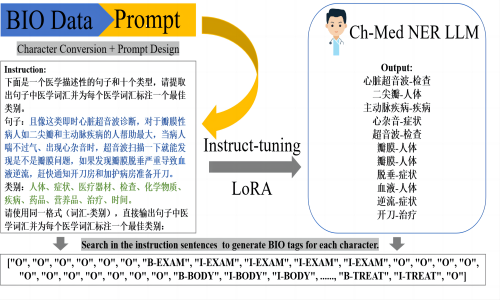

Xuelin Wang, Qihao Yang*(corresponding author)

Key words

- Medical Named Entity Recognition, Entity Extraction, Large Language Models.

Qihao Yang, Yong Li, Xuelin Wang, Shunhao Li, Tianyong Hao*(corresponding author)

(This paper won first prize in the SemEval-2023 Task 1 competition, in which 57 teams participated. It earned me an honor and was a good start to my academic career!)

Key words

- Visual Word Sense Disambiguation, Image-Text Retrieval, Contrastive Learning.